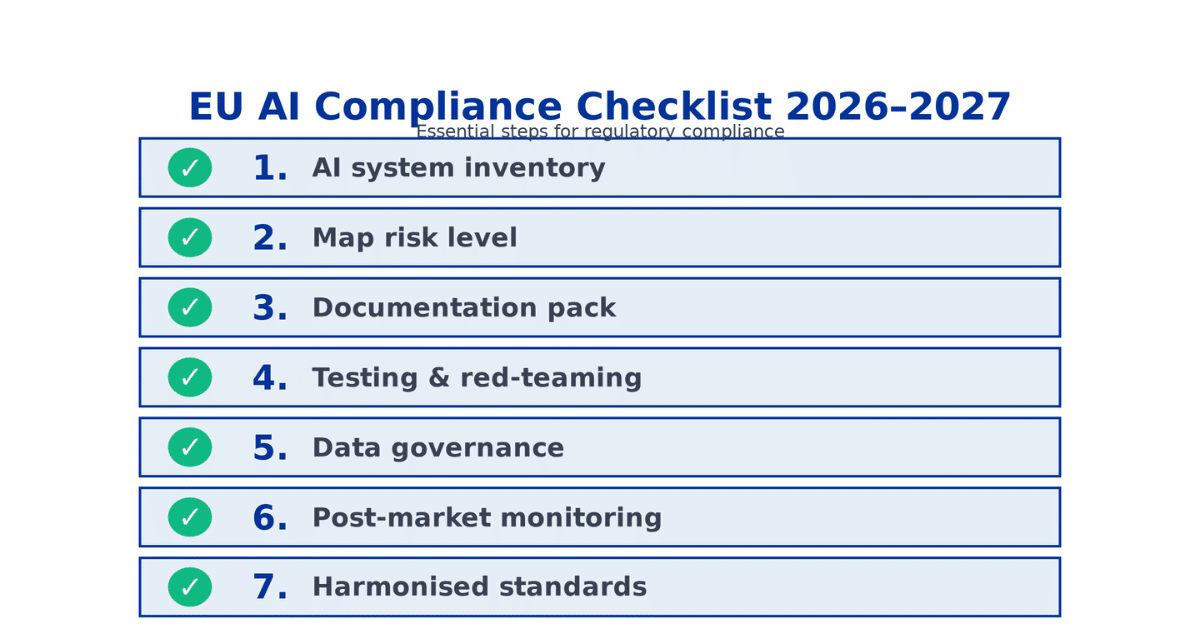

The EU AI compliance checklist 2026–2027 gives founders a practical map for the next wave of obligations, especially around high‑risk AI, transparency for generative systems, and post‑market monitoring. It builds directly on our EU AI regulation 2025 three-layer framework breakdown. Treat 2025–2027 as your build‑out window for documentation, testing, and governance.

Step 1: Build your AI system inventory

Create a live inventory of all models and features. For each, record purpose, users, data sources, training lineage, deployment surfaces, risk level, and current controls. Link each item to a product owner and a compliance owner. Tag anything that could be high‑risk or have a critical impact. For data handling specifics, see our Digital Omnibus GDPR AI training examples.
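One way to keep inventory entries consistent is to give each one the same shape and flag the blanks. The sketch below is a minimal illustration, assuming the inventory lives in code. The `AISystemRecord` class, the `missing_fields` helper, and all sample values are hypothetical; nothing here is a format the Act prescribes.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AISystemRecord:
    """One inventory entry; fields mirror the items listed in this step (hypothetical schema)."""
    name: str
    purpose: str
    users: str
    data_sources: List[str]
    training_lineage: str
    deployment_surfaces: List[str]
    risk_level: str               # e.g. "unclassified" until the system has been risk-mapped
    current_controls: List[str]
    product_owner: str
    compliance_owner: str
    tags: List[str] = field(default_factory=list)


def missing_fields(record: AISystemRecord) -> List[str]:
    """Return the names of required fields that are still empty (tags are optional)."""
    return [
        name for name, value in vars(record).items()
        if name != "tags" and not value
    ]


# Illustrative entry: the compliance owner has not been assigned yet.
record = AISystemRecord(
    name="support-chat-assistant",
    purpose="Draft replies to customer support tickets",
    users="Support agents",
    data_sources=["ticket history"],
    training_lineage="Fine-tuned from a third-party base model",
    deployment_surfaces=["internal helpdesk"],
    risk_level="unclassified",
    current_controls=["human review before sending"],
    product_owner="product-owner@example.com",
    compliance_owner="",
    tags=["needs-risk-review"],
)

print(missing_fields(record))  # ['compliance_owner']
```

A check like this can run in CI so an entry without an owner never reaches the live inventory.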

Step 2: Map risk level and apply controls

Use the Act’s risk ladder to decide whether a system is prohibited, high‑risk, limited‑risk, or minimal‑risk. For high‑risk candidates, implement risk management plans, quality thresholds, human oversight, robustness testing, and incident handling. For limited‑risk systems, ensure clear user disclosures and opt‑outs where relevant. For global strategy, compare the EU AI Act vs US AI EO approaches.
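Once a system has been assigned a tier, tracking which controls are still missing is mechanical. The sketch below assumes the tier has already been decided by people (classification is a legal judgment, not something this code attempts). The control labels and the `control_gaps` helper are hypothetical names for the measures listed in this step.

```python
from enum import Enum
from typing import Dict, List, Set


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk"
    MINIMAL = "minimal-risk"


# Hypothetical labels for the controls named in this step.
REQUIRED_CONTROLS: Dict[RiskTier, List[str]] = {
    RiskTier.HIGH: [
        "risk_management_plan",
        "quality_thresholds",
        "human_oversight",
        "robustness_testing",
        "incident_handling",
    ],
    RiskTier.LIMITED: ["user_disclosure", "opt_out_where_relevant"],
    RiskTier.MINIMAL: [],
}


def control_gaps(tier: RiskTier, implemented: Set[str]) -> List[str]:
    """List the required controls that are not yet implemented for a tier."""
    if tier is RiskTier.PROHIBITED:
        # No set of controls makes a prohibited use deployable.
        raise ValueError("Prohibited systems cannot be cleared by adding controls")
    return [c for c in REQUIRED_CONTROLS[tier] if c not in implemented]


gaps = control_gaps(RiskTier.HIGH, {"human_oversight", "incident_handling"})
print(gaps)  # ['risk_management_plan', 'quality_thresholds', 'robustness_testing']
```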

Step 3: Documentation & transparency pack

Standardize dataset cards, model cards, and change logs. Prepare plain‑language transparency: what the AI does, limitations, human fallback, and contact for redress. For generative AI, enable synthetic content labeling and provenance. Keep a technical annex ready for authorities and auditors.

Step 4: Testing, red‑teaming, and thresholds

Define pre‑deployment test plans with acceptance thresholds for safety, bias, and performance. Run periodic red‑team exercises and record findings. Automate regression tests to catch drift. Document known failure modes and mitigations.
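Acceptance thresholds are easiest to enforce when they are written down as data and checked automatically before each release. The following is a minimal sketch of such a gate. The metric names and limits are illustrative assumptions, not recommended values, and a missing metric is treated as a failure so that an untested dimension cannot slip through.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    higher_is_better: bool


# Illustrative limits only; real values come from your own test plan.
THRESHOLDS = [
    Threshold("accuracy", 0.90, higher_is_better=True),
    Threshold("bias_gap", 0.05, higher_is_better=False),
    Threshold("unsafe_output_rate", 0.01, higher_is_better=False),
]


def failed_thresholds(results: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """Return the metrics that miss their limit or were not measured at all."""
    failures = []
    for t in thresholds:
        value = results.get(t.metric)
        if value is None:
            failures.append(t.metric)  # not measured counts as a failure
            continue
        passed = value >= t.limit if t.higher_is_better else value <= t.limit
        if not passed:
            failures.append(t.metric)
    return failures


# Example run: bias gap is too large and the safety metric was never measured.
results = {"accuracy": 0.93, "bias_gap": 0.08}
print(failed_thresholds(results, THRESHOLDS))  # ['bias_gap', 'unsafe_output_rate']
```

Running the same function against every candidate release doubles as a regression test: a metric that passed last month and fails now points to drift.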

Step 5: Data governance and user rights

Document lawful basis for training and inference, plus DPIAs/PIAs for sensitive uses. Enforce data minimization, retention limits, encryption, and access controls. Build tooling for access, correction, deletion, objection, and appeals. For enterprise customers, honor contractual purpose limits and deletion SLAs.
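Retention limits only hold if something actually checks them. As a rough sketch, the snippet below finds stored records that have outlived their limit. The categories, day counts, and record IDs are made-up examples; your own limits should come from your DPIAs and customer contracts.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Tuple

# Hypothetical retention limits per data category.
RETENTION_LIMITS: Dict[str, timedelta] = {
    "inference_logs": timedelta(days=30),
    "support_transcripts": timedelta(days=180),
}

# Each record is (record_id, category, stored_at).
Record = Tuple[str, str, datetime]


def due_for_deletion(records: List[Record], now: datetime) -> List[str]:
    """Return the ids of records stored for longer than their category allows."""
    return [
        record_id
        for record_id, category, stored_at in records
        if now - stored_at > RETENTION_LIMITS[category]
    ]


now = datetime(2026, 3, 1, tzinfo=timezone.utc)
records = [
    ("log-001", "inference_logs", datetime(2026, 1, 15, tzinfo=timezone.utc)),       # past 30 days
    ("log-002", "inference_logs", datetime(2026, 2, 20, tzinfo=timezone.utc)),       # within 30 days
    ("chat-007", "support_transcripts", datetime(2025, 6, 1, tzinfo=timezone.utc)),  # past 180 days
]

print(due_for_deletion(records, now))  # ['log-001', 'chat-007']
```

Passing `now` in explicitly keeps the check deterministic, which makes it straightforward to test and to replay during an audit.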

Step 6: Post‑market monitoring

Set up monitoring pipelines for incidents, user complaints, and model drift. Define escalation paths and rollback triggers. Publish responsible AI updates whenever material changes ship. Maintain a lifecycle record tying releases to evaluations and fixes.
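Escalation paths and rollback triggers are clearer when they are expressed as explicit rules rather than left to judgment during an incident. The sketch below shows one possible shape. The `MonitoringWindow` and `Limits` types and every numeric limit are assumptions for illustration; the drift score is whatever metric your pipeline already produces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonitoringWindow:
    """Counts observed over one monitoring period (hypothetical shape)."""
    incidents: int
    complaints: int
    drift_score: float  # 0.0 means no measured drift; scale depends on your metric


@dataclass(frozen=True)
class Limits:
    """Illustrative trigger levels, not recommended values."""
    rollback_incidents: int = 1
    rollback_drift: float = 0.30
    escalate_complaints: int = 5
    escalate_drift: float = 0.15


def decide_action(window: MonitoringWindow, limits: Limits = Limits()) -> str:
    """Return 'rollback', 'escalate', or 'ok'; rollback rules are checked first."""
    if window.incidents >= limits.rollback_incidents or window.drift_score >= limits.rollback_drift:
        return "rollback"
    if window.complaints >= limits.escalate_complaints or window.drift_score >= limits.escalate_drift:
        return "escalate"
    return "ok"


print(decide_action(MonitoringWindow(incidents=0, complaints=2, drift_score=0.05)))  # ok
print(decide_action(MonitoringWindow(incidents=0, complaints=7, drift_score=0.10)))  # escalate
print(decide_action(MonitoringWindow(incidents=1, complaints=0, drift_score=0.00)))  # rollback
```

Logging each decision next to the release identifier also feeds the lifecycle record described above.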

Step 7: Align to harmonised standards and audits

Track emerging harmonised standards and align artifacts early to avoid a last‑minute scramble. Prepare for potential AI Office engagement and national authority requests. Consider a readiness audit ahead of deadlines to validate gaps.

Execution tips for founders

- Assign a compliance PM to run the program alongside product.

- Keep everything in a single governance workspace (inventory, tests, DPIAs, docs).

- Pilot the full stack on one product line before scaling across the portfolio.

- Use feature flags and kill switches to reduce operational risk.
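To make the last tip concrete, here is a minimal kill-switch sketch. It assumes an in-memory flag store, where a real setup would more likely read flags from a config service. The `KillSwitch` class, the `ai_answers` flag name, and the `answer` function are all hypothetical.

```python
from typing import Callable, Set


class KillSwitch:
    """In-memory store of disabled features (illustrative only)."""

    def __init__(self) -> None:
        self._disabled: Set[str] = set()

    def disable(self, feature: str) -> None:
        self._disabled.add(feature)

    def enable(self, feature: str) -> None:
        self._disabled.discard(feature)

    def is_enabled(self, feature: str) -> bool:
        return feature not in self._disabled


def answer(
    query: str,
    switch: KillSwitch,
    model_fn: Callable[[str], str],
    fallback_fn: Callable[[str], str],
) -> str:
    """Route to the model while the flag is on, otherwise to the human fallback."""
    if switch.is_enabled("ai_answers"):
        return model_fn(query)
    return fallback_fn(query)


def model_reply(query: str) -> str:
    return f"AI draft for: {query}"


def human_queue(query: str) -> str:
    return f"Queued for a human: {query}"


switch = KillSwitch()
print(answer("refund status", switch, model_reply, human_queue))  # AI draft for: refund status

switch.disable("ai_answers")  # e.g. triggered by an incident
print(answer("refund status", switch, model_reply, human_queue))  # Queued for a human: refund status
```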

With this EU AI compliance checklist 2026–2027, you’ll be able to move fast, ship responsibly, and avoid bottlenecks as obligations phase in.