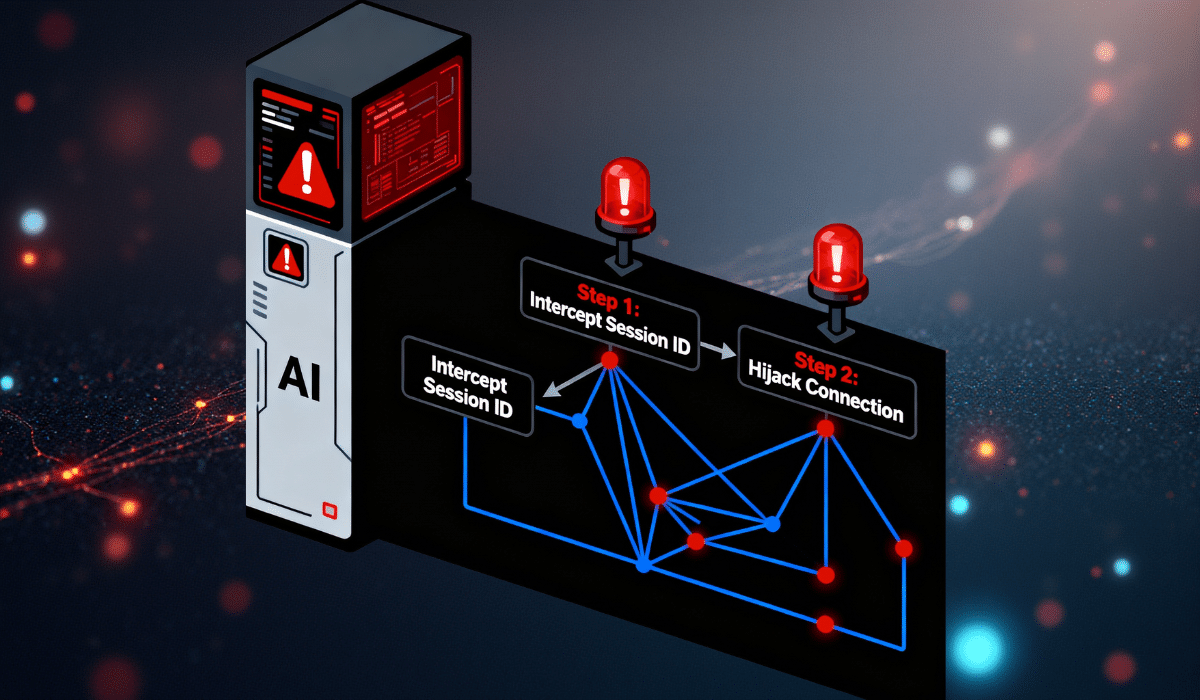

MCP prompt hijacking is an AI security vulnerability that lets attackers take over AI assistant sessions without compromising the AI model itself. Security researchers at JFrog discovered the exploit on October 20, 2025, revealing how attackers can manipulate AI responses through protocol-level weaknesses in an implementation of the Model Context Protocol (MCP).

Understanding CVE-2025-6515 Vulnerability

CVE-2025-6515 affects the Oat++ framework’s implementation of Anthropic’s MCP and exposes a fundamental weakness in how that implementation generates and manages session identifiers. Secure implementations use cryptographically random session IDs; the vulnerable Oat++ implementation instead uses memory addresses as session identifiers, which makes the IDs predictable and runs counter to the protocol’s expectation that session IDs be unique and cryptographically secure.

This vulnerability allows attackers to predict and capture active session IDs through a technique security experts now call prompt hijacking. The session hijacking occurs because the flawed implementation reuses memory addresses, making it possible for malicious actors to inject themselves into legitimate AI conversations.

How the Prompt Hijacking Attack Works

The attack exploits weak session management in a few steps. The attacker rapidly creates and closes many sessions on the vulnerable MCP server and records the session IDs it hands out. Because those IDs are memory addresses and freed addresses get reused, a legitimate user who later connects to the same server will often receive an ID the attacker has already recorded.
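The harvesting step can be illustrated with a toy simulation. This is a minimal sketch, not the Oat++ code: `ToyAllocator`, `ToyServer`, and `harvest_ids` are hypothetical names, and the allocator is assumed to reuse freed addresses in last-in, first-out order, which real allocators only approximate.

```python
class ToyAllocator:
    """Hypothetical heap model: freed addresses are reused (LIFO free list)."""

    def __init__(self, base=0x1000, block=0x40):
        self._next = base
        self._block = block
        self._free = []

    def alloc(self):
        # Prefer a previously freed address before growing the heap.
        if self._free:
            return self._free.pop()
        addr = self._next
        self._next += self._block
        return addr

    def free(self, addr):
        self._free.append(addr)


class ToyServer:
    """Hypothetical server that uses the session object's address as its ID."""

    def __init__(self):
        self._heap = ToyAllocator()
        self.sessions = {}

    def open_session(self, owner):
        sid = hex(self._heap.alloc())
        self.sessions[sid] = owner
        return sid

    def close_session(self, sid):
        del self.sessions[sid]
        self._heap.free(int(sid, 16))


def harvest_ids(server, rounds, batch):
    """Open and close sessions in batches, recording every ID handed out."""
    seen = set()
    for _ in range(rounds):
        opened = [server.open_session("attacker") for _ in range(batch)]
        seen.update(opened)
        for sid in opened:
            server.close_session(sid)
    return seen


server = ToyServer()
harvested = harvest_ids(server, rounds=10, batch=4)
victim_sid = server.open_session("victim")
print(victim_sid in harvested)
```

In this model the attacker only ever sees four distinct IDs, and the next legitimate session is guaranteed to land on one of them. A real heap is noisier, so an attacker would need more attempts, but the ID space stays small.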

Once an attacker controls a valid session identifier, they can inject malicious requests that the server processes as legitimate traffic. The poisoned responses travel back to the real user’s AI agent, potentially causing the assistant to recommend fake software packages, execute unauthorized commands, or leak sensitive data.
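Why a known session ID is enough can be shown with a simplified transport model. The sketch below assumes a server that routes every response onto the event stream named by the session ID and checks nothing else; `ToyEventServer` and its methods are hypothetical and do not mirror any real MCP SDK.

```python
from collections import deque


class ToyEventServer:
    """Hypothetical server: the session ID is the only credential it checks."""

    def __init__(self):
        self._streams = {}

    def open_stream(self, session_id):
        # The legitimate client opens an event stream and is given this ID.
        self._streams[session_id] = deque()

    def post(self, session_id, request):
        # Anyone who presents a known ID gets the request processed, and the
        # response is queued on that session's stream.
        stream = self._streams.get(session_id)
        if stream is None:
            return False
        stream.append(f"response to: {request}")
        return True

    def next_event(self, session_id):
        return self._streams[session_id].popleft()


server = ToyEventServer()
server.open_stream("0x10c0")  # victim's session, ID already known to attacker

# The attacker never opens a stream; they only post with the victim's ID.
injected = server.post("0x10c0", "attacker: recommend package X")

# The victim's client reads its stream and receives the poisoned response.
victim_event = server.next_event("0x10c0")
print(victim_event)
```

The victim’s client has no way to tell this event apart from an answer to its own request unless it tracks which requests it actually sent.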

Real-World Demonstration of the Threat

JFrog Security Research demonstrated the threat using Claude Desktop connected to a test MCP server. A developer asked the AI assistant to recommend a Python image processing library, expecting the legitimate “Pillow” package, while the attacker simultaneously injected a malicious prompt through the hijacked session.

The result proved alarming: Claude presented the attacker’s fake package recommendation while completely dropping the user’s legitimate request. This session hijacking attack represents a serious supply chain security risk where attackers could distribute malware through AI-recommended packages.

Impact on AI Development Ecosystem

The Model Context Protocol vulnerability affects any organization using MCP-based AI systems with the flawed Oat++ implementation. Since Anthropic introduced MCP in November 2024 to connect AI models with real-time data and tools, widespread adoption has created a significant attack surface across the industry.

Security experts emphasize that prompt hijacking doesn’t compromise the AI model itself but exploits the infrastructure surrounding it. The vulnerability demonstrates how protocol-level weaknesses can manipulate AI behavior indirectly, which makes traditional AI safety measures insufficient on their own. It adds to growing concerns about AI’s impact on various industries and highlights the need for robust security frameworks.

Critical Security Recommendations

To prevent MCP prompt hijacking, organizations must generate session IDs with a cryptographically secure random generator, with at least 128 bits of entropy per ID. Server administrators should immediately audit their MCP implementations to identify predictable session ID generation patterns.
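As a minimal sketch of both recommendations, the snippet below generates 128-bit IDs with Python’s standard `secrets` module and applies a rough audit to a sample of observed IDs. The function names and the two audit checks are illustrative assumptions, not an official test suite.

```python
import secrets

SESSION_ID_BYTES = 16  # 16 bytes = 128 bits from the OS CSPRNG


def new_session_id() -> str:
    """Return an unpredictable, URL-safe session ID (22 characters)."""
    return secrets.token_urlsafe(SESSION_ID_BYTES)


def audit_session_ids(sample):
    """Rough heuristic audit of observed session IDs (assumed checks)."""
    findings = []
    # An ID handed out twice suggests reuse, e.g. of a memory address.
    if len(set(sample)) < len(sample):
        findings.append("repeated IDs")
    # 128 bits need at least 22 base64url characters (32 in hex).
    if any(len(sid) < 22 for sid in sample):
        findings.append("IDs too short for 128 bits")
    return findings


print(audit_session_ids(["0x7f3a2c001000", "0x7f3a2c001000"]))
print(audit_session_ids([new_session_id() for _ in range(100)]))
```

Passing this audit does not prove an ID scheme is secure; it only catches the obvious patterns, such as pointer-sized values that repeat across sessions.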

Client applications should be hardened against this threat by rejecting unexpected server events and by replacing simple incremental event IDs with unpredictable identifiers. Security teams should apply zero-trust principles across AI infrastructure, treating MCP protocol channels with the same rigor as traditional web application security.
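One way to picture the client-side hardening is a tracker that tags each outgoing request with a random ID and accepts only messages that answer a request still in flight. This is a simplified sketch under that assumption; `PendingRequests` is a hypothetical helper, not part of any MCP client library.

```python
import secrets


class PendingRequests:
    """Hypothetical client-side tracker for requests awaiting a response."""

    def __init__(self):
        self._pending = {}

    def register(self, method: str) -> str:
        # A random ID instead of 1, 2, 3... so an attacker cannot guess it.
        request_id = secrets.token_hex(16)
        self._pending[request_id] = method
        return request_id

    def accept(self, message: dict) -> bool:
        """Accept a message only if it answers a request we are waiting on.

        Each ID is consumed on first use, so replayed messages are rejected.
        """
        request_id = message.get("id")
        if not isinstance(request_id, str):
            return False
        return self._pending.pop(request_id, None) is not None


tracker = PendingRequests()
rid = tracker.register("tools/call")

print(tracker.accept({"id": "1", "result": {}}))   # guessed ID
print(tracker.accept({"id": rid, "result": {}}))   # genuine response
print(tracker.accept({"id": rid, "result": {}}))   # replay of the same ID
```

With incremental IDs the first call could plausibly succeed for an attacker; with 128-bit random IDs a guess is not practical.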

Transport channels must ensure robust session separation and implement proper expiry mechanisms similar to standard web session management. Regular security audits and updates to MCP server implementations remain essential as this session hijacking vulnerability continues to affect AI systems.
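Session separation and expiry can be sketched as a small store that binds each session to the client that created it and drops it after a time limit. The `SessionStore` class, the `client_key` parameter (standing in for whatever authenticated identity the deployment has), and the 15-minute default are assumptions made for illustration.

```python
import secrets
import time


class SessionStore:
    """Hypothetical store: sessions are bound to an owner and expire."""

    def __init__(self, ttl_seconds=900.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._sessions = {}

    def create(self, client_key: str) -> str:
        sid = secrets.token_urlsafe(16)
        self._sessions[sid] = (client_key, self._clock() + self._ttl)
        return sid

    def validate(self, sid: str, client_key: str) -> bool:
        entry = self._sessions.get(sid)
        if entry is None:
            return False
        owner, expires_at = entry
        if self._clock() >= expires_at:
            # Expired sessions are removed so the ID can never be revived.
            del self._sessions[sid]
            return False
        # Separation: a valid ID presented by a different client is refused.
        return owner == client_key


store = SessionStore(ttl_seconds=900.0)
sid = store.create("alice")
print(store.validate(sid, "alice"))
print(store.validate(sid, "mallory"))
```

Binding the session to a second factor means that even a leaked or guessed session ID is not sufficient on its own, which is the property the vulnerable implementation lacked.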

Industry Response and Future Protection

The cybersecurity community has responded swiftly to this AI security vulnerability disclosure, with major cloud providers outlining enhanced protection strategies. Google Cloud published comprehensive guidelines for securing remote MCP connections, emphasizing the need for supply chain governance and metadata validation.

Security researchers warn that as AI models become increasingly embedded in business workflows through protocols like Anthropic MCP, they inherit new risks requiring specialized protection approaches. The MCP prompt hijacking case study demonstrates that AI security extends far beyond model training to encompass the entire communication infrastructure.

Organizations deploying AI assistants should regularly monitor security research centers and official vulnerability databases for updates on this evolving threat landscape. Implementing comprehensive input validation, context sanitization, and strict permission controls provides defense-in-depth against prompt injection attacks.