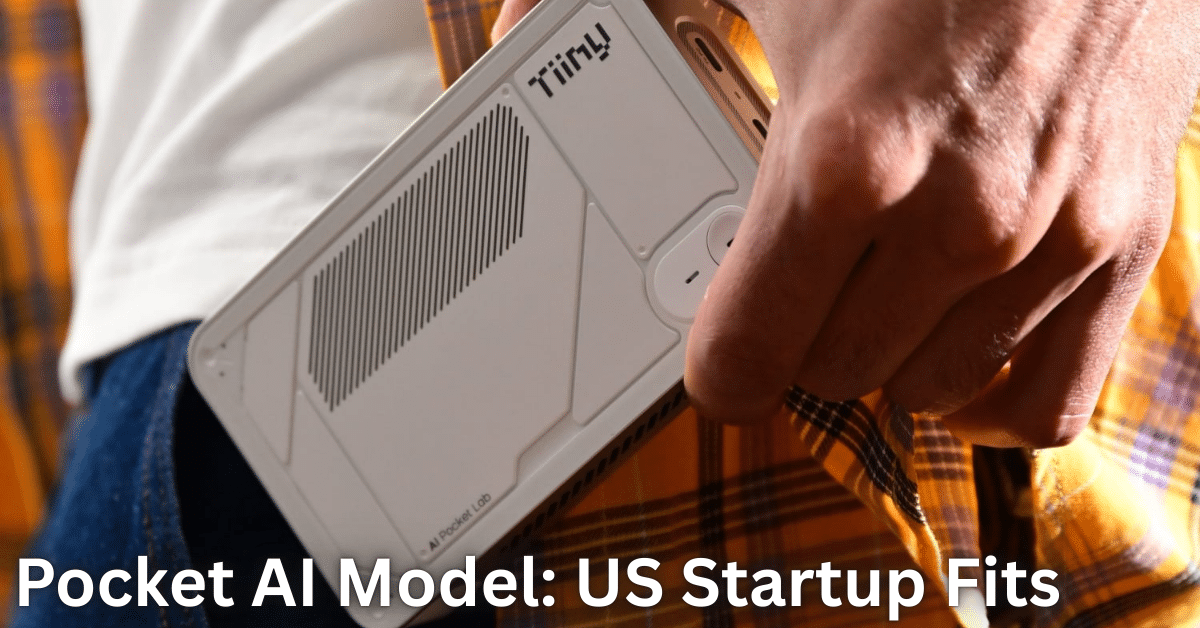

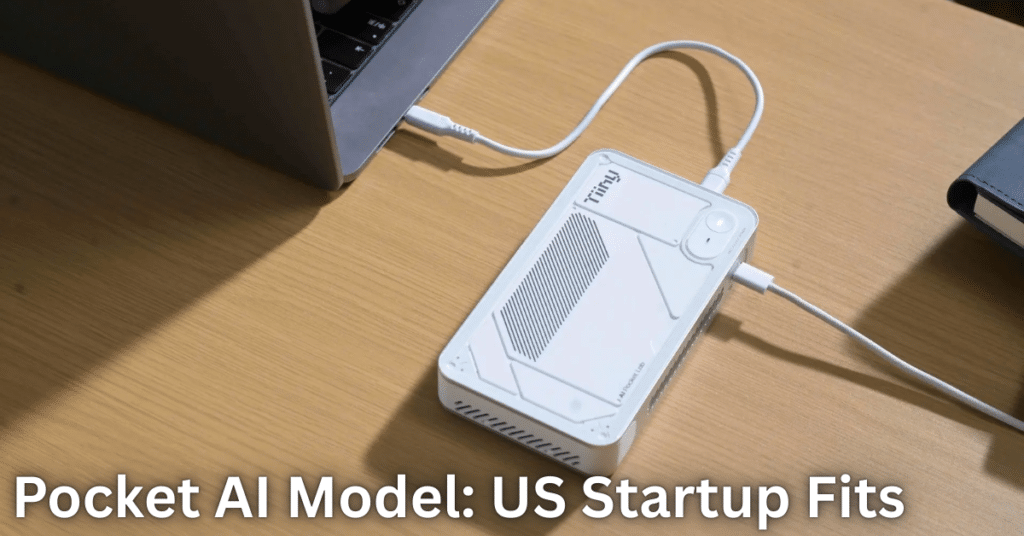

Pocket-sized AI hardware just got a major upgrade from a US startup called Tiiny AI. The company claims its new device runs a full 120-billion-parameter AI model on hardware that fits in your pocket, with no cloud servers or high-end computers required. If the claim holds up, it matters because it puts powerful AI literally in your hands: no waiting on an internet connection and fewer data-privacy worries, since everything runs offline on the device. The rest of this article explains how it works, what it means for you, and how it could change everyday AI use.

Key Highlights

- Tiiny AI Pocket Lab is a palm-sized device (14.2 x 8 x 2.53 cm, 300 grams) that handles up to 120B parameters fully offline.

- Tiiny AI says it delivers GPT-4o-level performance on PhD-style reasoning, deep analysis, and complex tasks.

- Key tech: TurboSparse (smart neuron skipping) and PowerInfer (CPU-NPU teamwork) make it fast and low-power at 65W.

- One-click setup for open models like Llama, Qwen, Mistral, DeepSeek, Phi, and GPT-OSS.

- Supports AI agents and workflow tools such as ComfyUI, Flowise, and OpenManus for creative workflows.

- Launching at CES 2026 with ongoing updates and hardware upgrades.

- Guinness World Record holder for smallest 100B+ LLM MiniPC.

How Pocket AI Model Technology Works

Tiiny AI's device shrinks the hardware needed for large AI models into something you can carry every day. Models of this size traditionally run in large data centers, but this device relies on two software techniques to run them on compact hardware.

TurboSparse skips the neurons that contribute little to each step of the computation, saving energy with little loss in output quality. PowerInfer splits the work between the device's general-purpose processor (CPU) and its dedicated AI unit (NPU), reaching speeds that once required GPUs costing a thousand dollars or more. Together, they handle models in the 10B-120B parameter range, described as the golden zone because it reportedly covers about 80% of real tasks such as coding help, research, and chat.

The result is on-device AI processing with no network round trips and no usage bills, only local compute. Imagine asking in-depth questions while offline on a train: that is the scenario this device is built for.
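To make the two ideas concrete, here is a minimal Python sketch. It is not Tiiny AI's code, and it does not reproduce the real TurboSparse or PowerInfer implementations. It only illustrates the general principles under stated assumptions: a feed-forward layer with a ReLU activation, where neurons that output zero can be skipped without changing the result, and a hypothetical `split_hot_cold` helper that assigns the most frequently active neurons to an accelerator and leaves the rest to the CPU. All function names and sizes are made up for illustration.

```python
import math
import random

def relu(value):
    return value if value > 0.0 else 0.0

def dense_ffn(x, w_up, w_down):
    """Reference feed-forward layer: every neuron is computed."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in w_up]
    out_dim = len(w_down[0])
    return [sum(hidden[n] * w_down[n][j] for n in range(len(hidden)))
            for j in range(out_dim)]

def sparse_ffn(x, w_up, w_down):
    """Same layer, but neurons whose activation is zero are skipped.

    A production system would use a cheap predictor to guess the active
    set in advance; this sketch computes the activation directly.
    """
    out = [0.0] * len(w_down[0])
    active = 0
    for row_up, row_down in zip(w_up, w_down):
        a = relu(sum(w * xi for w, xi in zip(row_up, x)))
        if a == 0.0:
            continue  # inactive neuron: no down-projection work needed
        active += 1
        for j, w in enumerate(row_down):
            out[j] += a * w
    return out, active

def split_hot_cold(activation_counts, accelerator_slots):
    """Hypothetical split: most frequently active neurons go to the accelerator."""
    ranked = sorted(range(len(activation_counts)),
                    key=lambda n: activation_counts[n], reverse=True)
    return set(ranked[:accelerator_slots]), set(ranked[accelerator_slots:])

# Small demo with random weights (sizes chosen arbitrarily).
rng = random.Random(0)
dim, neurons = 8, 32
x = [rng.uniform(-1, 1) for _ in range(dim)]
w_up = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(neurons)]
w_down = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(neurons)]

dense = dense_ffn(x, w_up, w_down)
sparse, active = sparse_ffn(x, w_up, w_down)
assert all(math.isclose(d, s, abs_tol=1e-9) for d, s in zip(dense, sparse))
print(f"computed {active} of {neurons} neurons; outputs match the dense layer")
```

With random weights, roughly half the neurons are inactive on average, so the saving here is modest. The point of the sketch is only that skipping zero-activation neurons leaves the output unchanged while doing less work.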

Why Size and Power Matter for Users

This is more than a small gadget; it is built to fit into daily life. At 300 grams, it slips into a bag like a thick smartphone. Its 65W power draw is low for hardware running models of this size, though how long it lasts on portable power has yet to be independently tested.

For creators and workers, it means freedom. Run quantized AI models for image generation or data crunching anywhere. Businesses save on cloud costs, while students get pro-level tools without subscriptions.
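Quantization is what makes a model of this size plausible on small hardware, and the arithmetic is easy to check. The sketch below estimates the storage needed for model weights alone at different precisions. It ignores the memory used for activations and the attention cache, and the article does not state the device's memory capacity, so treat the numbers as a rough lower bound. The helper name `weight_memory_gb` is ours.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9

for bits in (16, 8, 4):
    size = weight_memory_gb(120, bits)
    print(f"120B parameters at {bits}-bit: {size:.0f} GB")
```

At 16-bit precision the weights of a 120B model take about 240 GB, while 4-bit quantization brings that down to about 60 GB.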

Tiiny AI Pocket Lab: Features That Help You

Tiiny AI focuses on ease of use so you can get started quickly. One-click installation is meant to load popular open-source models in seconds, with no technical skills needed.

- Coding and reasoning: Handles multi-step problems like a PhD researcher.

- Creative agents: Tools like SillyTavern or Presenton for stories, art, or videos.

- Privacy first: All data stays on-device, protected by what is described as bank-grade encryption.

- Future-proof: OTA updates add new models and speed boosts over time.

Real-World Uses for Everyday People

Think of a writer brainstorming plots with Llama offline during travel. Or a developer testing DeepSeek code without server delays. Even hobbyists run ComfyUI for AI art on the go.

An edge AI device shines in places with poor connectivity, such as remote work sites or flights. It cuts cloud dependency, sidestepping outages and recurring fees. For families, it adds a layer of safety: children's questions are never sent to big tech servers.

Benefits of Mobile AI Hardware Like This

Pocket-sized AI offers advantages that cloud services cannot match. Here is how the two compare:

| Feature | Cloud AI | Pocket AI Model |
|---|---|---|
| Privacy | Data sent online | 100% local, encrypted |
| Speed | Internet lag | Instant offline |
| Cost | Monthly fees | One-time buy |
| Power Use | High server energy | 65W efficient |
| Access | Needs Wi-Fi | Works anywhere |
| Size | Big computers | Fits in pocket |

For everyday users, the comparison favors the pocket device on every row. AI model compression is what makes this possible: it shrinks huge models to fit small hardware while preserving most of their capability.

US AI Startup’s Vision for Your Future

Tiiny AI sees a world where AI feels personal, not corporate. Its inference technology targets creators, researchers, and professionals who need dependable local compute.

At CES 2026, expect demos of even bigger models. Continuous upgrades mean your device grows smarter over the years. For creators in Bhopal, this means portable tools for SEO content or AI image generation without cloud limits. See our article on OpenAI and US AI leadership in 2026 for more trends.

Mobile AI hardware like this could spark a boom in local AI apps. Developers build faster; users gain control.

Challenges and What to Watch

No tech is perfect. Battery life during heavy 120-billion-parameter model runs still needs testing, and heat may limit long sessions in a pocket. At 65W, though, its power draw is in the range of a typical laptop charger rather than a desktop GPU.
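A quick back-of-the-envelope calculation shows why battery life is worth watching. The sketch below assumes the 65W figure is a sustained draw, which the article does not confirm, and uses a hypothetical 100 Wh external battery pack as an example. Neither the pack nor the helper names come from Tiiny AI.

```python
def energy_kwh(watts, hours):
    """Energy consumed at a constant power draw, in kilowatt-hours."""
    return watts * hours / 1000

def runtime_hours(battery_wh, watts):
    """Hours a battery of the given capacity lasts at a constant draw."""
    return battery_wh / watts

DEVICE_WATTS = 65           # figure quoted in the article
HYPOTHETICAL_PACK_WH = 100  # assumed external pack, not a device spec

print(f"8 hours at {DEVICE_WATTS} W: {energy_kwh(DEVICE_WATTS, 8):.2f} kWh")
print(f"{HYPOTHETICAL_PACK_WH} Wh pack at {DEVICE_WATTS} W: "
      f"{runtime_hours(HYPOTHETICAL_PACK_WH, DEVICE_WATTS):.1f} hours")
```

Under these assumptions, a full working day uses about half a kilowatt-hour, and a 100 Wh pack would last roughly an hour and a half at full load. Real-world draw is likely to vary with the workload.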

Tiiny promises fixes via updates. Early adopters get the edge, shaping future versions. Watch TechCrunch for hands-on reviews and Ars Technica for deep tech breakdowns.

Why the Pocket AI Model Creates Lasting Impact

Powerful AI now fits your life, not corporate data centers. On-device AI processing delivers secure, instant help for work, learning, creativity, or fun.

Three huge wins:

- Freedom: Create anywhere – flights, villages, power cuts

- Savings: End ₹50,000+ yearly cloud bills

- Privacy: Your business ideas stay yours forever

Low-power AI could spark a wave of new devices. Phones, glasses, and even jewelry may be running quantized AI models by 2028.

Ready for portable AI brains? Explore best AI tools for mobile, edge AI trends, and build your pocket AI workflow today.